Modifications to H&S are proposed to improve performance. The most discussed one is an edge/curvature aware smoothness constraint, i.e. a matrix with weights such that we can modify the Horn & Schunck energy to

noting that the here is a backward flow, is a matrix of partial derivatives:

are the images, and is the weight of the smoothness loss. H&S is a special case of this where . The proposed is approximately (there is a slight change; see the last point of ‘Other advancements’) the symmetric

where is a weighting parameter (discussed below). Note the presence of second order/curvature constraints as opposed to merely first-order constraints in typical edge-aware smoothness. The parameters used are (all discussed below).
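The formulas above can be sketched as follows. The notation here is an assumption rather than a quote from the paper: $\mathbf{u} = (u, v)^\top$ is the flow, $I_1, I_2$ are the two images, $\alpha$ is the smoothness weight, $W$ is the weight matrix, $b$ is the curvature weighting parameter, and subscripts denote partial derivatives. Which image is warped, and with which sign, depends on the flow convention, so treat the data term as one plausible reading of "backward flow".

$$
E(\mathbf{u}) = \int \Big[ \big(I_2(\mathbf{x}) - I_1(\mathbf{x} - \mathbf{u})\big)^2 + \alpha^2 \operatorname{tr}\big( (\nabla\mathbf{u})^\top W \, \nabla\mathbf{u} \big) \Big] \, d\mathbf{x},
\qquad
\nabla\mathbf{u} = \begin{pmatrix} u_x & v_x \\ u_y & v_y \end{pmatrix}
$$

With $W$ equal to the identity, the trace reduces to $u_x^2 + u_y^2 + v_x^2 + v_y^2$, which is the H&S smoothness term. A form of the weights consistent with the derivation under "Finding" (first-order tangent term plus $b^2$ times a second-order term, divided by the determinant) would be

$$
W = \frac{F}{\det F},
\qquad
F = \begin{pmatrix} I_y \\ -I_x \end{pmatrix} \begin{pmatrix} I_y & -I_x \end{pmatrix}
+ b^2 \begin{pmatrix} I_{yy} & -I_{xy} \\ -I_{xy} & I_{xx} \end{pmatrix}^{2}
$$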

A variety of other advancements are discussed as well. I think these will be useful for our method too in order to improve performance.

Tags

Smoothness constraints Energy or loss minimization NOT deep learning Old school Unsupervised Optical flow Unsupervised Second-order methods

Other advancements

- Delinearization. Discussed by the derivative work BBPW: the linearized constraint is replaced with the delinearized version . This, on its own, leads to a huge performance gain.

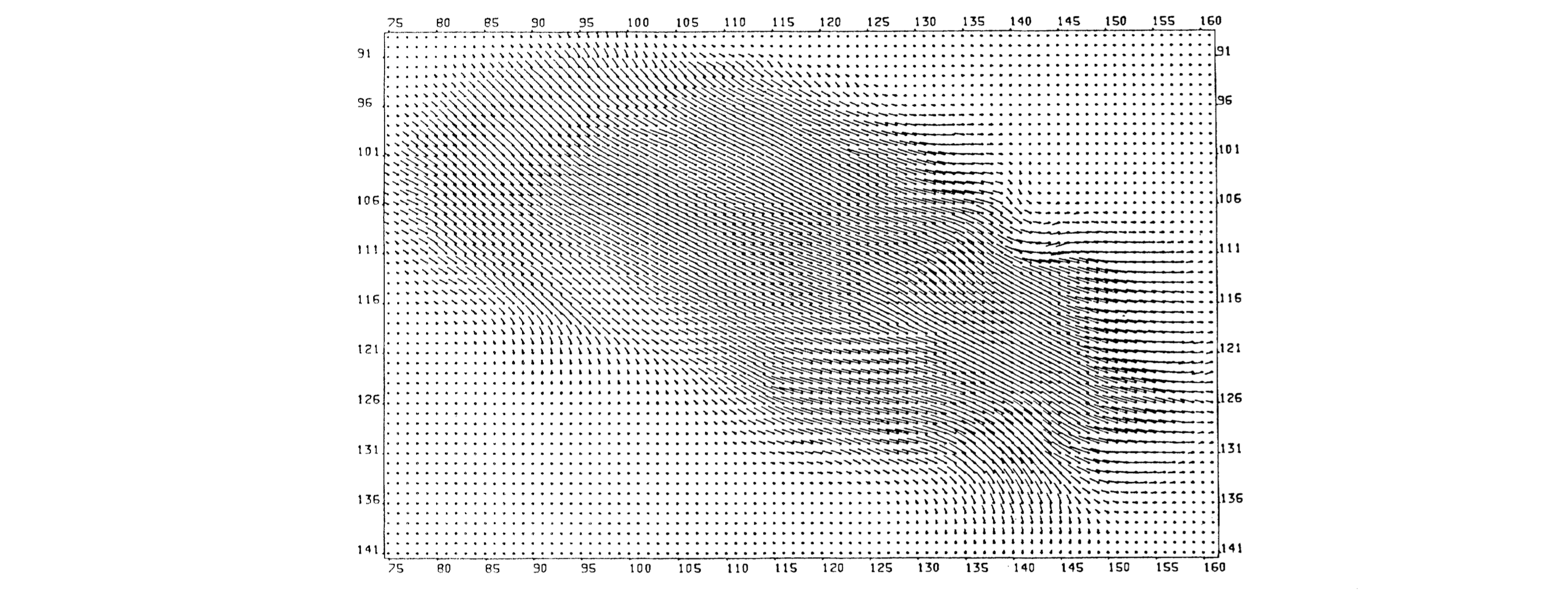

- Corners computed first. Corners with significant gray value variation are computed first, as their movement can be easily tracked, with the other areas initially left blank. If are the eigenvalues of , corners are when (and, I assume, ), as contains values about the first- or second-derivatives at that point. The metric used to determine “cornerness” is During this computation, the smoothness weight is set to . Then, after this computation is complete, the edge flows are propagated inwards and is set upwards again.

- Smoothness scheduling. A schedule for the smoothness parameter which looks like this:

  ![[Screen Shot 2023-08-31 at 20.13.21.png|center|450]]

- Temporal averaging for gradients. Improving gradients with temporal averaging, i.e. replace with (where is the current estimate for ).

- Setting a lower limit (5-12) for the eigenvalues of , below which the eigenvalues are rounded up.

- Some small thing about Beaudet’s operator which I think is related in spirit to my problem of needing GT flow to be precisely 0. I don’t really get it and I don’t think it’s super relevant.

- Something I get even less about adding something to the diagonal elements of .
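The eigenvalue lower limit from the list above can be sketched in NumPy. This is a minimal illustration under assumptions: the helper name `floor_eigenvalues` is hypothetical, the matrix is taken to be symmetric, and "rounded up" is read as clamping to the limit itself. The value 5.0 is just the low end of the 5-12 range mentioned in the note.

```python
import numpy as np

def floor_eigenvalues(A, limit=5.0):
    """Clamp the eigenvalues of a symmetric matrix (or a stack of them) to >= limit.

    Hypothetical helper: decomposes A = V diag(w) V^T, raises any eigenvalue
    below `limit` up to `limit`, and reassembles the matrix.
    """
    w, V = np.linalg.eigh(A)          # eigenvalues in ascending order
    w = np.maximum(w, limit)          # apply the lower limit
    # Scale the columns of V by the clamped eigenvalues, then multiply by V^T.
    return (V * w[..., None, :]) @ np.swapaxes(V, -1, -2)

# Example: one eigenvalue (1.0) is below the limit, the other (20.0) is not.
A = np.array([[20.0, 0.0],
              [0.0, 1.0]])
B = floor_eigenvalues(A, limit=5.0)
```

Only the small eigenvalue changes; the eigenvectors, and therefore the orientation encoded by the matrix, are left as they were.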

Intuition for the weights

Scaling effect of a weight matrix

From H&S, we have the smoothness term

But we don’t care about all , etc. In directions with high visual contrast (gradients), we want to suppress the smoothness loss in favor of the photometric loss. Thus, if and are rough measures of the size of the gradients in the and directions, respectively, we have the nice property
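As a small worked case of this scaling effect, take a diagonal weight matrix. The symbols $w_x, w_y$ are illustrative and not the note's original notation, and $\nabla\mathbf{u}$ is assumed to be the $2 \times 2$ matrix whose columns are the gradients of the flow components $u$ and $v$:

$$
\operatorname{tr}\left( (\nabla\mathbf{u})^\top \begin{pmatrix} w_x & 0 \\ 0 & w_y \end{pmatrix} \nabla\mathbf{u} \right)
= w_x \, (u_x^2 + v_x^2) + w_y \, (u_y^2 + v_y^2)
$$

Choosing $w_x$ small where the image varies strongly in $x$ relaxes the penalty on $u_x$ and $v_x$ only, while $w_x = w_y = 1$ recovers the plain H&S term $u_x^2 + u_y^2 + v_x^2 + v_y^2$.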

Finding

We derive itself through another method. First, we begin with two heuristics.

- should be close to along the directions within an object. One such direction is the tangent to the object’s boundary; thus . We can write the norm of that expression as

- We can use a second-order constraint similar to the one above. That is, should be maximal in the direction of greatest curvature; thus it should be around zero in the direction perpendicular to that of greatest curvature. This leads us to minimize which leads to a similar metric

This leads us to a weighting matrix where and is some weighting parameter between these two objectives. However, we would like to normalize , as it might take on large values (especially being quadratic). Thus we divide by the determinant (recall that the determinant is the factor by which a matrix scales volume) to yield

which yields as above.

In connection with the argument above, note that the top-left entry in is all various derivatives/partials over , measuring variation in , and the bottom-right entry in is all various derivatives/partials over .
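The construction in this section can be sketched numerically. This is a rough illustration under assumptions, not the paper's implementation: the function name `oriented_weights`, the use of `np.gradient` for derivatives, and the parameter names `b` and `gamma` are all mine. In particular, the small diagonal term `gamma` is added only so the determinant is never zero; it may or may not correspond to the diagonal adjustment mentioned under "Other advancements".

```python
import numpy as np

def oriented_weights(img, b=1.0, gamma=1e-3):
    """Per-pixel 2x2 weight matrix W = F / det(F), indexed as (x, y).

    F = t t^T + b^2 * M M^T, with tangent t = (I_y, -I_x) and
    M = [[I_yy, -I_xy], [-I_xy, I_xx]]. gamma * Identity is an
    assumption added for numerical safety.
    """
    img = np.asarray(img, dtype=float)
    Iy, Ix = np.gradient(img)            # axis 0 is y (rows), axis 1 is x (columns)
    Iyy, Iyx = np.gradient(Iy)
    Ixy, Ixx = np.gradient(Ix)
    Ixy = 0.5 * (Ixy + Iyx)              # symmetrize the mixed derivative

    # First-order term: outer product of the edge tangent with itself.
    F = np.empty(img.shape + (2, 2))
    F[..., 0, 0] = Iy ** 2
    F[..., 0, 1] = -Ix * Iy
    F[..., 1, 0] = -Ix * Iy
    F[..., 1, 1] = Ix ** 2

    # Second-order (curvature) term.
    M = np.empty(img.shape + (2, 2))
    M[..., 0, 0] = Iyy
    M[..., 0, 1] = -Ixy
    M[..., 1, 0] = -Ixy
    M[..., 1, 1] = Ixx
    F += b ** 2 * (M @ np.swapaxes(M, -1, -2))

    F += gamma * np.eye(2)               # keep det(F) strictly positive
    det = np.linalg.det(F)
    return F / det[..., None, None]

# Example: a horizontal ramp, i.e. contrast only in the x direction.
img = np.tile(np.arange(8.0), (8, 1))
W = oriented_weights(img)
```

On the ramp, the x-x entry of `W` comes out much smaller than the y-y entry, so flow variation across the contrast direction is penalized far less than variation along it, which matches the intuition in "Scaling effect of a weight matrix".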

Cited

- Rotationally Invariant Image Operators, P.R. Beaudet—Can’t find the paper online

- 1981.H&S—Determining Optical Flow (Horn & Schunck, 1981)

Cited By
