This chapter from a book on computer vision techniques deals with gradient-based optical flow methods. There are discussions of the methods of L&K, H&S, robustness, probabilistic optical flow, and layer prediction with EM; however, there is a particular focus on local methods. All the techniques discussed are rather old-school.

Gradient-based methods

First, L&K (and similar methods) are covered. In order for brightness constancy to hold, a number of assumptions must hold true:

This is probably bad news for my ultra-sensitive calibration-needy current methods. It might mean that warping features is better (features that can account for brightness changes and such).

This is probably bad news for my ultra-sensitive calibration-needy current methods. It might mean that warping features is better (features that can account for brightness changes and such).

Each pixel’s gradient constraint imposes a line upon which must lie, which is perpendicular to . If we only consider one pixel, this does not give enough information to figure out where must be, but if we consider multiple nearby pixels, this can give us many constraints that we can satisfy to the optimal extent with least-squares. This yields

if . (The are partial derivatives of with respect to .)

Aperture problem

If is low rank, i.e. are all multiples of each other (think stripes), then we experience the aperture problem. When motion is only evident in one direction, the true optical flow cannot be discerned; for example, a barber’s pole looks like it’s moving upwards continually despite merely rotating around its central axis.

Increasing robustness

Robustness can be increased by applying a function to the least-squares objective; instead of using where , we can minimize the sums of something like

where is some predefined limiting constant.

Parameterized flows

A variety of ways to parameterize local flows are discussed. In addition to pure translational motion , we can also use affine transform , or even pick parameters from a subspace of pre-defined optical flows.

Adding iteration to the gradient solution

Especially for nonlinear cases (i.e. pretty much all cases), iteration will improve the solution. Similar to the view of L&K, we can view each further step of iteration as merely modifying the existing prediction of the flow. This reminds of diffusion quite a bit. In particular, we can even go further to the following pretty interesting formulation:

I was thinking about this idea of applying optical flow repeatedly as addition too; I think it’s pretty cool.

Coarse to fine generation

There is a small section discussing how coarse-to-fine generation can address temporal aliasing. I’m not really sure what’s going on here unfortunately. But some sort of coarse-to-fine element seems important in any approach.

Global methods

Next, some global methods (especially H&S) are discussed. Global smoothing methods involve energy functionals, such as the one of H&S:

It is mentioned that global methods can fill in textureless regions. Beyond just H&S, some advancements in the energy functional are discussed:

Especially given the deep learning/loss context I think the global methods will be more useful. However, I’m a bit curious what exactly these stronger local constraints may be. The main drawback of global methods is said to be computation efficiency, which is now solved by virtue of being twenty years in the future!

Conservation assumptions

Gradient constancy

There is the classic brightness consistency ones, but some higher-order ones may provide better behavior (as well as just more constraints). The gradient conservation criterion used in BBPW is mentioned here; it provides two constraints at each pixel:

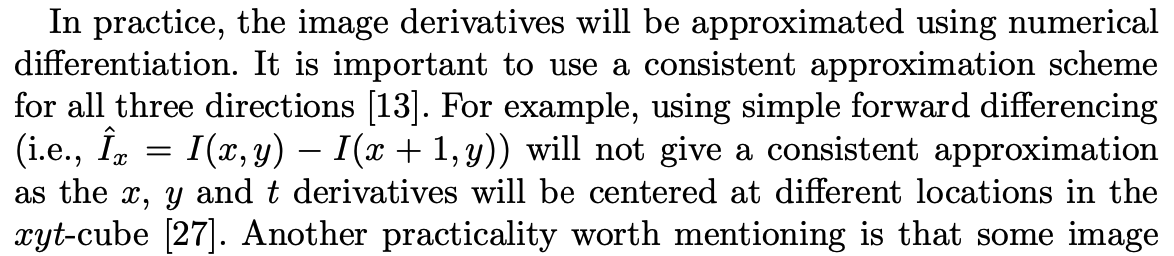

However, estimating derivatives is quite pernicious (even first-order derivatives can be pretty suspect!) It is also laced with subtleties, as described here:

However, estimating derivatives is quite pernicious (even first-order derivatives can be pretty suspect!) It is also laced with subtleties, as described here:

Moreover, this assumption may not make as much sense as it seems. For example, if there is a rotating pattern with bright spots and dark spots, the rotation of the pattern will cause the gradient to rotate as well, thus violating the gradient assumption. Shear/stretch/scale may also cause this; in the chapter, any “first-order deformation” will thus mutate the gradient, causing this assumption to no longer hold true.

Phase-based methods

There is a discussion of phase-based methods. I don’t really get it, but I want to read more about it; it seems extremely interesting. I’m not sure if it’s because one of the authors of this book was one of the authors of the phase-based constancy paper, but it seems to really upsell this technique, and I’m quite curious.

Probabilistic formulations

I’ve been thinking about this for a long time, so this is super exciting to me. I don’t think they have an explicit model of for each flow prediction , but there is this really cool part:

The mentioned condition (1.16) is

The mentioned condition (1.16) is

Thus this can give us an estimate of the “likelihood” of this exact . However, as it still depends on only local constraints, it seems like this is not exactly what I was thinking of. But there is likely cool prior work on probabilistic optical flows. If there is some way to walk “up” the gradient of above, that may also be a cool approach.

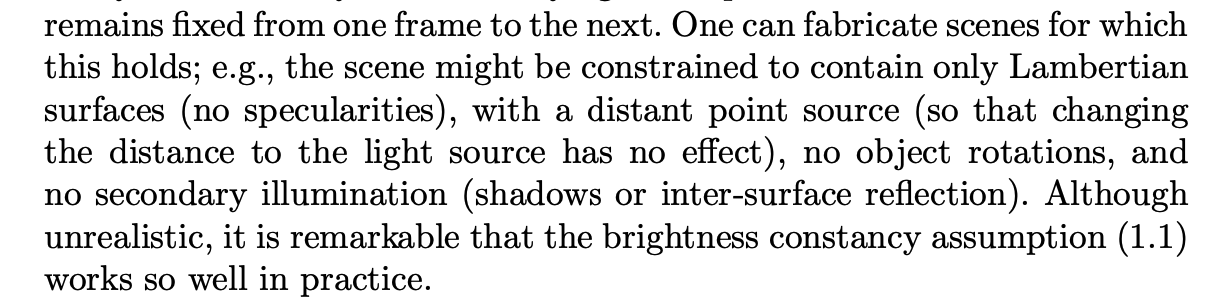

Layered models

Finally, there is a discussion on layered models, where EM/mixture models are used to estimate the layers for each pixel. It is based off the prior section in that we now need estimates of the probability of each warp. I didn’t find this section very interesting though, so I didn’t really read it in detail.

Tags

Book chapters, review articles Old school Optical flow Unsupervised Local methods Repeated warping Multi-scale pyramids, coarse-to-fine Robustness to noise Affine, parameterized optical flow Flow subspaces Probabilistic flow Layered images Expectation-maximization NOT deep learning Gradient constancy Phase constancy Smoothness constraints

Cited

- 1981.H&S—Determining Optical Flow (Horn & Schunck, 1981)

- 1981.L&K—An Iterative Image Registration Technique with an Application to Stereo Vision (Lucas & Kanade, 1981)

- 1985.A&B—Spatiotemporal energy models for the perception of motion (Adelson & Bergen, 1985)

- 1990.F&J—Computation of Component Image Velocity from Local Phase Information (Fleet & Jepson, 1990)

- 1991.Prob—Probability Distributions of Optical Flow (Simoncelli… 1991)

- 1994.BFB—Performance of Optical Flow Techniques

- Motion illusions as optimal precepts (Weiss… 2002)

- Differentiation of discrete multi-dimensional signals (Farid & Simoncelli, 2004)

- Note: not many citations, probably just read it quickly

- Lucas/Kanade meets Horn/Schunck: Combining local and global optic flow methods. (Bruhn… 2005)

Cited By

ROOT

Return: index